Recently, I’ve been seeing more posts on multimodal AI; it's becoming a popular topic. GPT-4 has integrated it into its interface, while Hugging Face is releasing more and more multimodal models.

Multimodal AI holds great potential and fascination for a simple reason: it mirrors how we humans perceive, remember, and think about the world around us.

Our experience of the world is multimodal – we see objects, hear sounds, feel textures, smell scents, and taste flavors, and then we make decisions.

I’ll be discussing:

What is Multimodal AI?

What sort of outputs does it generate?

What are some applications?

What are its common advantages and challenges?

In this article, I want to explain the basics and the aspects you can focus on right away.

So how do we define multimodal AI?

Before we dive into what multimodal AI is, let's decode what 'modality' means.

Humans rely on a blend of sensory inputs; we're essentially multimodal creatures. We've got touch, sight, hearing, and smell, all different channels for absorbing information.

Each source or form of information is called a modality. Information can be conveyed through many media, such as speech, video, and text, and captured by many sensors, such as radar, infrared, and accelerometers. Each of these represents a modality.

Modality has a pretty broad definition. For example, we can consider two different languages as two modalities. Even datasets collected under different conditions can be treated as two modalities.

Let’s break down the types of AI by modality.

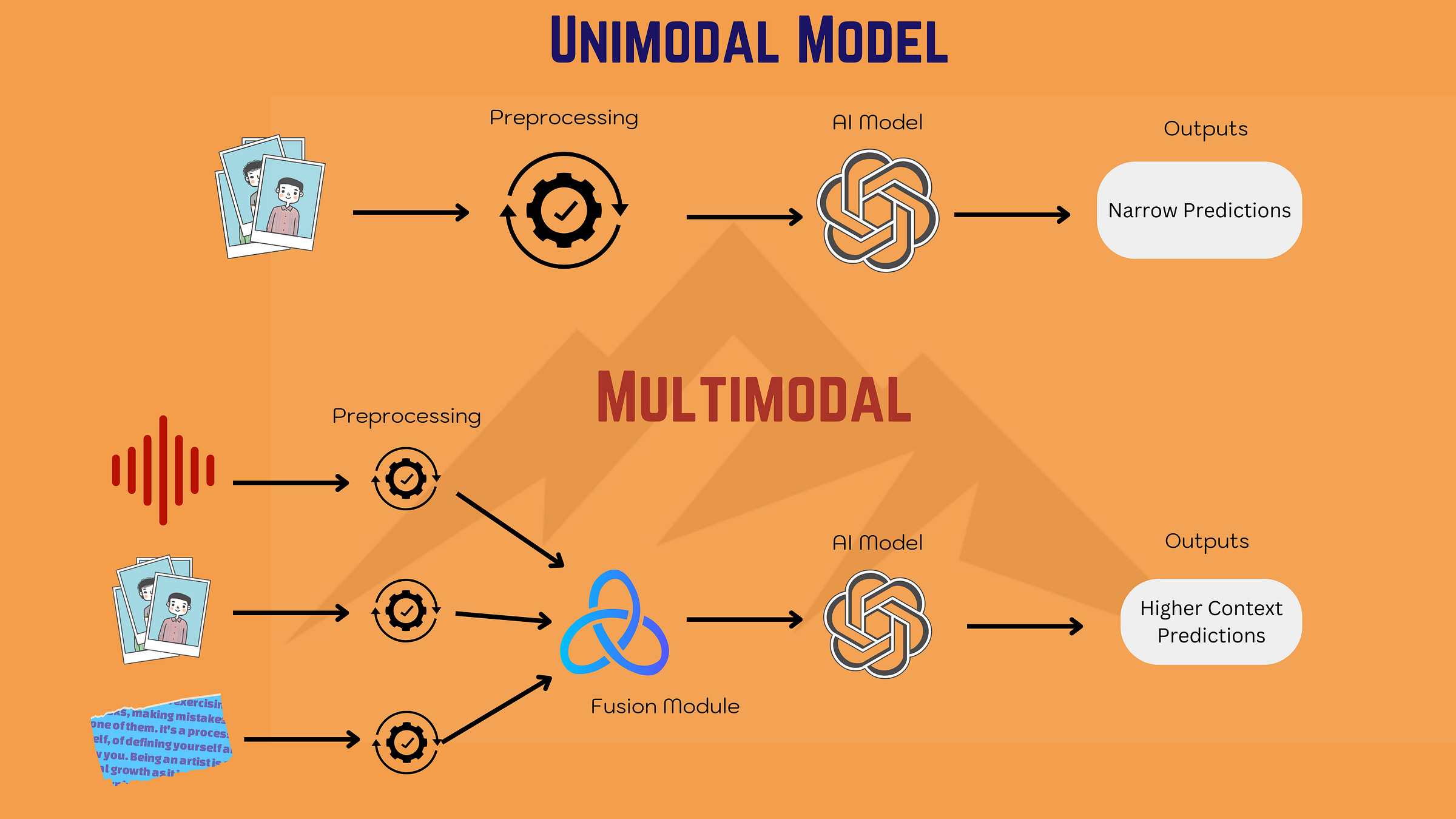

Unimodal AI: a single source. Unimodal AI is akin to an expert in a single discipline. It's designed to process one type of data input, be it text, images, or sound. Basic ML models are usually unimodal.

For instance, a unimodal text-based AI uses natural language processing (NLP) to analyze and interpret textual data, but it cannot process visual or auditory information. Within its domain it can be highly efficient, but its context is limited.

Bimodal AI: two sources. Bimodal AI fuses two distinct data modalities (inputs) to perform inference. The term "bimodal" refers to combining these two inputs into one system.

This requires a fusion module, which merges features after each modality has been preprocessed independently. It's akin to cooking components separately and then combining them.
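To make the "cook separately, then combine" idea concrete, here is a minimal sketch of feature-level fusion. The two encoders are hypothetical toy stand-ins for real models (for example, a text embedding model and an image CNN). Fusion here means normalizing each modality's features and concatenating them, which is only one of several possible fusion strategies.

```python
import math

def l2_normalize(vec):
    """Scale a feature vector to unit length so no modality dominates by magnitude."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)

def encode_text(text):
    """Hypothetical toy text encoder: word count, average word length, digit count."""
    words = text.split()
    avg_len = sum(len(w) for w in words) / len(words) if words else 0.0
    digits = sum(ch.isdigit() for ch in text)
    return [float(len(words)), avg_len, float(digits)]

def encode_image(pixels):
    """Hypothetical toy image encoder: mean and max brightness of a grayscale image."""
    flat = [p for row in pixels for p in row]
    return [sum(flat) / len(flat), float(max(flat))]

def fuse_features(text, pixels):
    """Fusion module: each modality is processed independently,
    then the normalized feature vectors are concatenated into one joint vector."""
    text_feats = l2_normalize(encode_text(text))
    image_feats = l2_normalize(encode_image(pixels))
    return text_feats + image_feats

fused = fuse_features("a red bike 2 wheels", [[0, 128], [255, 64]])
print(len(fused))  # 5
```

A downstream model would then take the joint vector as its input. Normalizing first is a design choice: without it, the raw pixel values would swamp the small text features.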

In an image captioning application, for example, a bimodal AI uses computer vision to interpret the image and NLP to generate a relevant textual description. Combining the two yields a more comprehensive interpretation of the data.

Multimodal AI: Converging Multiple Data Types

Multimodal AI extracts features from several data types (text, images, audio) and uses them to make predictions, with each modality adding its own context. It may involve more than one fusion module to combine and interpret the preprocessed data.

Think of it like making a smoothie. Each ingredient (input) has its own flavor (data type) and must be cleaned (preprocessed) before use.

The ingredients then go through a blender (a deep learning module) that produces predictions (inference). Fusion combines the different flavors into a delicious smoothie (the final prediction). This leverages the strengths of different data types for better predictive or generative results.
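One common way to implement the final "blending" step is late fusion. Each modality-specific model makes its own prediction, and the predictions are combined afterwards. Below is a minimal sketch that takes a weighted average of per-modality class probabilities. The modality names, labels, and numbers are made up for illustration.

```python
def late_fusion(per_modality_probs, weights=None):
    """Combine class-probability predictions from several modality-specific models.

    per_modality_probs maps a modality name to a dict of {label: probability}.
    weights optionally maps a modality name to a non-negative weight (default: equal).
    Returns a fused {label: probability} dict renormalized to sum to 1.
    """
    if weights is None:
        weights = {m: 1.0 for m in per_modality_probs}
    labels = {label for probs in per_modality_probs.values() for label in probs}
    fused = {label: 0.0 for label in labels}
    for modality, probs in per_modality_probs.items():
        w = weights.get(modality, 0.0)
        for label in labels:
            fused[label] += w * probs.get(label, 0.0)
    total = sum(fused.values())
    if total == 0:
        raise ValueError("all modality weights are zero")
    return {label: score / total for label, score in fused.items()}

predictions = {
    "text": {"cat": 0.6, "dog": 0.4},
    "image": {"cat": 0.9, "dog": 0.1},
    "audio": {"cat": 0.3, "dog": 0.7},
}
fused = late_fusion(predictions)
print(max(fused, key=fused.get))  # cat
```

The weights are where the "recipe" lives. If you trust the audio model much more, for example with `{"text": 1.0, "image": 1.0, "audio": 4.0}`, the fused prediction flips to "dog".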

When we discuss data from different modalities, it's important to note that, while they each have unique characteristics (e.g., text data is discrete, while image and video data are continuous), there is also a certain level of association between them.
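To see the discrete/continuous difference in practice, here is a minimal preprocessing sketch. Text becomes integer IDs from a finite vocabulary, and image pixels become floating-point values. The tiny vocabulary and helper names are hypothetical. Real systems use learned tokenizers and larger images.

```python
def tokenize(text, vocab):
    """Text is discrete: each word maps to an integer ID from a finite vocabulary."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

def normalize_pixels(pixels, max_value=255):
    """Image data is treated as continuous: pixel intensities become floats in [0, 1]."""
    return [[p / max_value for p in row] for row in pixels]

vocab = {"<unk>": 0, "a": 1, "red": 2, "bike": 3}
print(tokenize("A red scooter", vocab))         # [1, 2, 0]
print(normalize_pixels([[0, 255], [51, 102]]))  # [[0.0, 1.0], [0.2, 0.4]]
```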

For instance, a photo might have text associated with it (like a caption or tags), which provides context or description. Similarly, in a video, the visual elements (what you see) are often closely associated with the audio elements (what you hear).
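The video example hints at a practical step: before a model can exploit the association between what you see and what you hear, the two streams have to be lined up in time. Here is a minimal sketch that pairs each video frame timestamp with the audio/transcript segment playing at that moment. It assumes the segments are sorted and non-overlapping, and the data is made up.

```python
from bisect import bisect_right

def align_frames_to_segments(frame_times, segments):
    """Pair each video frame timestamp with the audio segment playing at that moment.

    segments: list of (start_seconds, end_seconds, transcript_text), sorted by start
    and assumed not to overlap.
    Returns a list of (frame_time, transcript_text or None).
    """
    starts = [start for start, _, _ in segments]
    aligned = []
    for t in frame_times:
        i = bisect_right(starts, t) - 1  # last segment starting at or before t
        if i >= 0 and t < segments[i][1]:
            aligned.append((t, segments[i][2]))
        else:
            aligned.append((t, None))  # no speech at this moment
    return aligned

segments = [(0.0, 2.0, "hello"), (2.5, 4.0, "goodbye")]
print(align_frames_to_segments([1.0, 2.2, 3.0], segments))
# [(1.0, 'hello'), (2.2, None), (3.0, 'goodbye')]
```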

These relationships and associations allow multimodal models to interpret complex data holistically. Modeling these relationships and labeling data well are crucial for businesses and teams building these models. Without a clear understanding of how the modalities relate, and without good labels, the project is likely to fail. It may even be better to use a simple unimodal model until the data and relationships are understood.

Understanding these relationships matters whether you want predictions or generative outputs.

What sort of responses (outputs) can multimodal AI have?

Data quality matters for every modality, but you also need to decide what the multimodal model should output. The output doesn't have to be text; it can be text, images, audio, and more. In more advanced cases, it can even be generative.

Single Modal Output: This is a straightforward type of output. The output is in a single modality, although the model may take one or more modalities (image, text, audio) as input. For example, if you ask a text-based AI a question, the output will be a text response. For a video model, the inputs may be image and audio data, while the output might be text summarizing the video.

Multimodal Output: In this case, the AI can produce outputs in different modalities. For example, a model might take a text input and generate an image and a text summary. Or it might analyze a video (which combines visual and audio data) and provide a text summary, an illustration, and an audio summary. The outputs may even share a data type while serving as different modalities, for example a text summary and a text description.

Generative Output: Multimodal models can also be used as generative models, creating new content or data in any modality. For instance, a generative AI could write a new piece of music, create a realistic image, or even generate a webpage. This output is 'generative' because it's not just analyzing or categorizing existing data but actually creating something new. This can be in a single modality (like text or images) or multimodal (like a video with both visual and audio components).

Data science teams and businesses must be clear about what outputs they need before they build. The desired output can drastically alter data and AI product requirements, as well as development time.

Both the modalities you feed into the model and the output you want matter. Get them wrong, and you risk building a very expensive paperweight.

What Are the (Potential) Ways Multimodal AI Can Be Used?

We’ve covered what a modality is and the ways models can respond. But how do we apply this to real use cases? Examples could fill multiple articles, so let's boil it down to a few.

E-commerce

Multimodal AI can analyze customer interactions, sales history, and preferences, and use that data to offer personalized product recommendations. These include visual recommendations based on the style or color preferences seen in images or videos the customer has interacted with. The potential is significant, since recommendation systems can become truly personalized.
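Here is a minimal sketch of how two of these signals might be blended. A behavioral score comes from sales history, and a visual score is the cosine similarity between a product's image embedding and an image the customer liked. The product fields, the embeddings, and the 50/50 weighting are all hypothetical. A production recommender would learn these components rather than hand-code them.

```python
import math

def cosine(a, b):
    """Cosine similarity between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def recommend(products, purchased_categories, liked_image_embedding,
              w_history=0.5, w_visual=0.5, k=2):
    """Rank products by blending a behavioral signal with a visual-style signal.

    products: list of dicts with hypothetical keys "name", "category", "image_embedding".
    purchased_categories: categories from the customer's sales history.
    liked_image_embedding: embedding of an image the customer interacted with.
    """
    counts = {}
    for c in purchased_categories:
        counts[c] = counts.get(c, 0) + 1
    total = len(purchased_categories) or 1
    scored = []
    for p in products:
        history_score = counts.get(p["category"], 0) / total
        visual_score = cosine(p["image_embedding"], liked_image_embedding)
        scored.append((w_history * history_score + w_visual * visual_score, p["name"]))
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]

products = [
    {"name": "red sneakers", "category": "shoes", "image_embedding": [1.0, 0.0]},
    {"name": "blue sneakers", "category": "shoes", "image_embedding": [0.0, 1.0]},
    {"name": "red jacket", "category": "outerwear", "image_embedding": [0.9, 0.1]},
]
print(recommend(products, ["shoes", "shoes", "outerwear"], [1.0, 0.0]))
# ['red sneakers', 'red jacket']
```

Note how the red jacket outranks the blue sneakers. The customer bought more shoes, but the visual signal captures their taste for red.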

Searching Using Video

Google's video-to-text research enhances accessibility by converting video content to text, which benefits people with hearing impairments or a preference for reading. By analyzing both spoken words and visual cues, it also extracts richer data. This improves the searchability and organization of video content, making it easier to locate specific information. It also aids content localization and translation, extending reach to diverse linguistic and cultural audiences.
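Once video has been converted to timestamped text, locating specific information can be as simple as a search over segments. Here is a minimal sketch, assuming the segments already exist; in practice they would come from a speech-to-text step, optionally enriched with labels from a visual model. This is a plain keyword match for illustration, not how Google's system works.

```python
def search_transcript(segments, query):
    """Return start times of transcript segments containing every query word.

    segments: list of (start_seconds, text) pairs (hypothetical input here).
    """
    terms = query.lower().split()
    hits = []
    for start, text in segments:
        words = set(text.lower().split())
        if all(term in words for term in terms):
            hits.append(start)
    return hits

segments = [
    (0.0, "Welcome to the kitchen"),
    (12.5, "Now chop the onions"),
    (30.0, "Fry the onions until golden"),
]
print(search_transcript(segments, "onions"))  # [12.5, 30.0]
```

Returning timestamps rather than whole videos is what makes the content "searchable". A viewer can jump straight to the moment that matters.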

Translation and Publishing

Multimodal AI can transform manga translation by improving the accuracy and cultural nuance of translations. It speeds up the translation process, allowing quicker, lower-cost international releases. The technology also enables content to be customized for different global audiences with cultural sensitivities in mind. This makes manga more accessible and engaging for diverse readers around the world.

The common thread here is that multimodal AI broadens the range and applications of products, and with them the potential revenue streams. With curated data, this is a powerful tool for enhancing business models.

What are the Strengths and Challenges of Multimodal AI?

But like any other technology, it has pros and cons. Since this is an overview, I will save the product aspects for another article. Let's start with some technical strengths.

Enhanced Models. Multimodal learning expands a model's capabilities. A model's potential lies in the datasets and sources it uses, and multimodal AI draws on diverse data sources, allowing models to learn from a broader and more complex range of inputs. Rich data can give us a more comprehensive understanding of the use cases we are addressing.

LLM Resource Efficiency. Training LLMs from scratch requires substantial resources, and even more so when one modality involves images or videos. Fine-tuning a pre-trained multimodal model avoids much of that cost. In niche domains, small companies can often fine-tune a multimodal model with less data than a unimodal model, such as a language model, would need. In these cases, a multimodal model can be the more cost-effective choice.

Improved Predictive Power. Multimodal models can be not only resource-efficient but also more predictive. Traditional methods often rely on a single data type, whereas multimodal systems provide more context, which can lead to more precise predictions. If one form of data is unclear, other modalities can clarify it. Under the same resource constraints, fine-tuning a multimodal model can yield better results than fine-tuning a unimodal one.
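"If one form of data is unclear, other modalities can clarify" can be sketched as confidence-weighted fusion. Here, each modality's prediction is weighted by how certain it is, measured by how far its entropy falls below the maximum possible entropy. Missing modalities are skipped. Entropy weighting is just one heuristic chosen for illustration, and the predictions are made up.

```python
import math

def entropy(probs):
    """Shannon entropy in nats; higher means a less certain prediction."""
    return -sum(p * math.log(p) for p in probs.values() if p > 0)

def confidence_weighted_fusion(per_modality_probs):
    """Fuse predictions, trusting confident modalities more.

    Modalities whose prediction is None (missing or unreadable input) are skipped.
    Each remaining modality is weighted by (max_entropy - its entropy), so a
    near-uniform (unclear) prediction contributes almost nothing.
    """
    available = {m: p for m, p in per_modality_probs.items() if p is not None}
    if not available:
        raise ValueError("no modality produced a prediction")
    labels = sorted({label for p in available.values() for label in p})
    max_entropy = math.log(len(labels)) if len(labels) > 1 else 1.0
    fused = {label: 0.0 for label in labels}
    total_weight = 0.0
    for probs in available.values():
        w = max(max_entropy - entropy(probs), 1e-6)  # small floor avoids divide-by-zero
        total_weight += w
        for label in labels:
            fused[label] += w * probs.get(label, 0.0)
    return {label: v / total_weight for label, v in fused.items()}

predictions = {
    "image": {"cat": 0.5, "dog": 0.5},  # blurry photo: the image model is unsure
    "text": {"cat": 0.9, "dog": 0.1},   # the caption clearly mentions a cat
    "audio": None,                      # no audio track available
}
fused = confidence_weighted_fusion(predictions)
print(max(fused, key=fused.get))  # cat
```

A plain average would pull the answer toward the unhelpful 50/50 image prediction. Weighting by confidence lets the clear caption dominate instead.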

These strengths hold major promise. As LLMs and GenAI mature, they will enable a wide range of applications, especially if businesses and data teams can define narrow use cases. But you’re probably wondering what the challenges are.

As with unimodal (or "normal") ML modeling, it all comes back to data and the build process:

Data integration and processing are complex. This is like orchestrating a symphony from different musical instruments. Each data type (text, images, sound) has unique nuances that require specialized processing methods. Crafting models to handle this is difficult, especially when fusing predictions from different modalities, and extracting meaningful insights from the result is also complex.

Data collection and curation. The cleaner and more abundant the data, the better the model performs, but collecting certain types of data can be challenging. Data quality needs to be ensured for each modality, and especially for data that must align with other modalities. To make better use of data during training, consider combining aligned and unimodal data. Collecting aligned training data can be very difficult: aligned image-text data may be relatively easy to gather, but aligned data for other modalities can be much harder to collect.
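Here is one way to "use aligned and unimodal data at the same time". It is a minimal sketch of a batch builder that mixes scarce aligned (image, text) pairs with more plentiful single-modality examples at a fixed ratio. Each example is tagged with its source, so a training loop could apply a cross-modal loss to aligned pairs and a single-modality loss to the rest. The file names, the 50% ratio, and the tagging scheme are assumptions for illustration.

```python
import random

def mixed_batches(aligned_pairs, text_only, image_only, batch_size=4,
                  aligned_fraction=0.5, num_batches=3, seed=0):
    """Yield training batches that mix aligned (image, text) pairs with unimodal examples.

    Each example is a (source_tag, data) tuple, where source_tag is
    "aligned", "text", or "image".
    """
    rng = random.Random(seed)  # seeded for reproducible sampling
    n_aligned = round(batch_size * aligned_fraction)
    unimodal = [("text", t) for t in text_only] + [("image", i) for i in image_only]
    for _ in range(num_batches):
        batch = [("aligned", rng.choice(aligned_pairs)) for _ in range(n_aligned)]
        batch += [rng.choice(unimodal) for _ in range(batch_size - n_aligned)]
        rng.shuffle(batch)
        yield batch

aligned_pairs = [("img1.png", "a dog on a beach"), ("img2.png", "a cat on a sofa")]
text_only = ["a horse in a field", "a bird on a wire"]
image_only = ["img3.png", "img4.png"]
for batch in mixed_batches(aligned_pairs, text_only, image_only):
    print([tag for tag, _ in batch])
```

Fixing the aligned fraction per batch keeps the scarce aligned data from being drowned out by the larger unimodal pools.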

Modeling Modalities. Data from different modalities may need to be fused, because different modalities can represent the same concepts. In multimodal AI, matching different types of data to each other is tricky. Another major challenge is transferability: multimodal models trained on one dataset may not perform well on another. These are complex issues we need to address before we build these systems.
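A common way to approach the matching problem is to project each modality into a shared embedding space, then compare embeddings by cosine similarity, in the style of contrastive models such as CLIP. The sketch below assumes the embeddings already exist; the numbers are made up rather than produced by a real encoder. It matches each image to its closest caption.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def match_captions(image_embeddings, caption_embeddings):
    """For each image embedding, return the index of the most similar caption embedding.

    Assumes both modalities were already mapped into the same embedding space.
    """
    return [
        max(range(len(caption_embeddings)), key=lambda j: cosine(img, caption_embeddings[j]))
        for img in image_embeddings
    ]

images = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
captions = [[0.1, 0.9, 0.0], [0.8, 0.2, 0.1]]
print(match_captions(images, captions))  # [1, 0]
```

The hard part hidden in this sketch is the shared space itself: training encoders so that related images and text really do land close together requires exactly the aligned data discussed above.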

Final Thoughts

I’d like to emphasize that this is an overview of multimodal AI for the curious beginner. It is by no means comprehensive or complete, nor is it a guide to implementation.

Multimodal AI is still in its early stages as a product. While we already use it in tools like GPT-4, large-scale implementation still faces many challenges, from annotating multimodal data to cross-modal transformation. The research community and industry will keep looking for new ways to use the technology and explore new ideas.

Despite the many open questions, multimodal learning will become more common in our daily lives. As data science and product teams become more familiar with the product and development processes, we can expect to see more innovative and practical applications of multimodal AI.

I’ll be writing some follow-up articles on this topic, so let me know in the comments what you want to learn.